“If you document the moment something is created, and you document how it evolves, you never need to guess. You can show the world your work.”

Chris Young

Origins

The beginnings of Authentifi AI did not unfold in a moment of crisis or sudden revelation. Instead, the idea emerged gradually, shaped by decades of experience in technology and by a digital landscape that was quietly shifting the once-static ground beneath everyone’s feet. Artificial intelligence was accelerating at a pace few could fully grasp. Generative models were learning to write, imitate, and synthesize with uncanny fluency. The world was celebrating the convenience, but beneath that excitement lived a growing uncertainty: people were losing sight of their own creative fingerprints.

For most observers, and even for direct users, this transformation felt disjointed and abstract. For Chris Young, it was impossible to ignore.

Chris is a serial entrepreneur and tech innovator with a long history of building emerging technologies. Years before Authentifi AI, he developed an online marketplace for used textbooks, an online auction platform, and a musical artist database system, projects that set the direction of his later digital work. That background gave him a rare vantage point and sharpened his instincts for identifying unmet needs in technological ecosystems. He understood how digital cultures evolve, how users shape the technologies they adopt, and how gaps always emerge when innovation moves faster than the structures meant to support it.

Reflecting on that early stage, he described a persistent and unsettling realization.

“People were getting lost in the noise around AI,” he said. “Everyone was asking how to tell if something was written by a machine, but no one was asking how to prove that something was written by a person.”

That difference was not semantic. It was the entire foundation of Authentifi AI.

Chris saw these early generative systems imitating human expression with increasing accuracy. Essays, emails, poems, reports, and entire applications could be drafted with little effort. The question was not whether machines would learn to mimic human work. They already had. The question was how individuals would protect their authenticity in a world where imitation is effortless.

“I kept thinking that the answer had been in front of us the whole time,” Chris said. “If you document the moment something is created, and you document how it evolves, you never need to guess. You can show the world your work.”

In a digital age fascinated by detection, Chris chose to focus on verification.

He did not want to police creativity. He wanted to preserve it.

The Problem of Authorship

The challenge of authorship is older than artificial intelligence. The value of creative work has always depended on trust. The reader trusts the writer. The professor trusts the student. The publisher trusts the researcher. The employer trusts the staff member who submits a report. This trust has traditionally been supported by context and expectation rather than proof.

Artificial intelligence disrupted that structure immediately.

Suddenly, anyone could produce content indistinguishable from human work, and detection systems rushed, and failed, to meet the moment. They tried to classify writing as machine-generated or human-generated using probability models. These attempts were well-intentioned but deeply flawed.

Chris summarized the issue with clarity.

“A model that tries to detect AI is fighting against the very thing that created it. If you train a system to catch bad actors, bad actors will train systems to evade detection. It becomes an arms race that no one wins.”

Detection was always going to lose. It was the wrong approach to the wrong question.

Authentifi AI answered the correct question instead. Not “Did a machine write this?” but “Can a person prove they wrote this?”

This shift reframed the entire landscape. Authentifi provides creators with a timestamped, immutable record that captures each stage of their work. A piece of writing is not verified by analyzing the text, but by tracking its evolution. Revision history, draft progression, and key metadata form a chain of provenance.

Chris often returns to the same explanation when describing the heart of the system.

“Mistakes matter. Revisions matter. The pauses matter. All the things we try to hide in the creative process are the very things that prove we made something. The goal is to make authorship universal and effortless.”

This speaks to the humanity of creation. A machine can generate a polished paragraph in an instant, but it cannot recreate the path a person took to arrive there. It cannot replicate the research, the hesitation, the moment a draft takes a new direction, or the subtle shift when a writer understands what they meant only after writing it.

Authentifi captures those moments.

It preserves the process, and in doing so preserves and validates the creator.

Academic Trust

The academic world felt the impact of artificial intelligence earlier and more intensely than most fields. Students became early adopters of generative tools, sometimes innocently, sometimes impulsively, and sometimes in ways that undermined learning and academic integrity.

Not coincidentally, accusations of misconduct began to rise. Students who did their own work found themselves flagged by unreliable detectors. Faculty felt unprepared, unsure how to evaluate authenticity in a rapidly changing environment. Detection tools produced false positives that led to unfounded accusations, and entire classrooms wondered whether trust could survive the technological shift.

Chris understood this tension.

“In education, trust has become fragile,” he said. “Students are worried about being accused of cheating, and educators are worried about missing misconduct. We can fix both problems by documenting creation instead of hunting for truth after the fact.”

Authentifi aims to become a bridge between the two.

Standard plagiarism and AI detection tools continue to struggle with accuracy, and their failures carry consequences that extend well beyond the classroom. For students, the risk is not only academic but reputational, with the possibility of disciplinary action or legal challenge resting on unreliable determinations.

This risk is especially acute for students for whom English is not a first language. Many rely on language models not to generate ideas, but to translate their own thinking into clearer, more conventional academic English. In these cases, the work is entirely theirs, but the voice does not initially align with institutional expectations. Without a way to document authorship, students often feel compelled to defend themselves repeatedly, explaining their linguistic background and writing process to each instructor. Authentifi addresses this inequity by preserving the evolution of the work itself, allowing students to demonstrate ownership without having to justify or explain themselves. By documenting how ideas take shape rather than judging the final form alone, the platform shifts the conversation away from suspicion and toward recognizing legitimate use.

A student opens a blank document and starts writing. Authentifi quietly logs the development of the work. As the student revises, expands, restructures, or rethinks, the system preserves each stage. There is no surveillance. No judgment. No score. No algorithm guessing what is or is not human. Instead, the student gains a shield. If challenged, they can show their process. If not challenged, Authentifi remains invisible.

Academia took notice quickly. Conversations with educators, assessment professionals, and academic stakeholders highlighted growing uncertainty around authorship in AI-influenced environments and reinforced the need for systems that document human creation, and AI-human co-creation, without eroding trust.

Educators had long struggled with the rise of digital shortcuts and the difficulty of validating genuine work. Authentifi aims to offer an elegant solution grounded not in suspicion but in verifiable documentation.

Chris describes an early exchange with Dr. Vinod Ahuja of FGCU, whose interest sharpened as Authentifi’s approach to metadata-based authentication came into focus. “He said he had a machine learning course coming up and asked if we wanted to test our thesis in that environment. Once we received approval, we were able to take a controlled data set and run it through different models like neural networks and random forest approaches, using metadata rather than content to see what patterns emerged.”

Chris explains that the goal is not to generate an answer, but to weight variables associated with human authorship. “You start looking at things like trust indicators and behavioral signals and assigning weight to those variables. That is where machine learning becomes useful.” The limitation, he notes, is that early work relies on static and synthetic data. “Without real-time student data, the models struggle to reconcile what is human and what is machine. That is why the next phase matters.”

He describes the outcome as foundational rather than conclusive. “Students wrote papers on which approach showed the most promise for reaching an authentication score that reflects how much of the final work came from the student. Now the focus is on bringing that into a live classroom environment. That is the next step. That is where this becomes real.”
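The idea of weighting authorship signals can be illustrated with a toy model. This is not the model from the FGCU course, which the article does not specify beyond naming neural networks and random forests. It is a minimal logistic score over three hypothetical metadata signals (`revision_sessions`, `minutes_active`, `pasted_fraction`) with hand-picked placeholder weights. In a real system those weights would be learned from data, which is exactly where the lack of real-time student data becomes the limiting factor.

```python
import math

# Hypothetical metadata signals with placeholder weights, chosen for illustration only.
# A trained model would learn these values from labeled data instead of hand-setting them.
WEIGHTS = {
    "revision_sessions": 0.4,   # more separate editing sessions nudges the score up
    "minutes_active": 0.02,     # more time spent drafting nudges the score up
    "pasted_fraction": -3.0,    # a larger share of pasted-in text pulls the score down
}
BIAS = -1.0


def authorship_score(signals: dict[str, float]) -> float:
    """Combine weighted signals and squash the result into a 0-1 score (logistic function).

    Signals missing from the input are treated as 0.0.
    """
    z = BIAS + sum(weight * signals.get(name, 0.0) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    iterative_draft = {"revision_sessions": 6, "minutes_active": 120, "pasted_fraction": 0.1}
    single_paste = {"revision_sessions": 1, "minutes_active": 5, "pasted_fraction": 0.9}
    print(round(authorship_score(iterative_draft), 2))  # 0.97
    print(round(authorship_score(single_paste), 2))     # 0.04
```

A random forest or neural network would replace the single weighted sum with a learned, nonlinear function of the same inputs, but the shape of the problem, metadata in and a score out, stays the same.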

Chris put it this way.

“I think the deeper meaning is this paradigm shift of learning, where we actually, through inference with the machines, are able to become faster, smarter, better as humans, because we have access to the entirety of archived information. You can be laser focused, and you can get necessary information and narrow it down and bring it in, and then start using it right away. And so that was the biggest, biggest, light bulb for me. We are not trying to catch people. We are trying to protect them. When you protect the honest student, you protect the integrity of the entire system.”

Authentifi aligns naturally with long-standing principles of educational fairness. It reduces stress for students, creates clarity for faculty, and plans to reshape conversations around academic integrity. Instead of policing misconduct, it enables the creator to demonstrate authorship. This is not a monitoring or detection system but a transparency system. It does not analyze content for patterns or anomalies. It verifies creation by maintaining a secure trail of drafts, revisions, and metadata that connects the work to its human source.

Authentifi is not designed to solve a cheating problem. It is designed to solve a trust problem.

The Technology

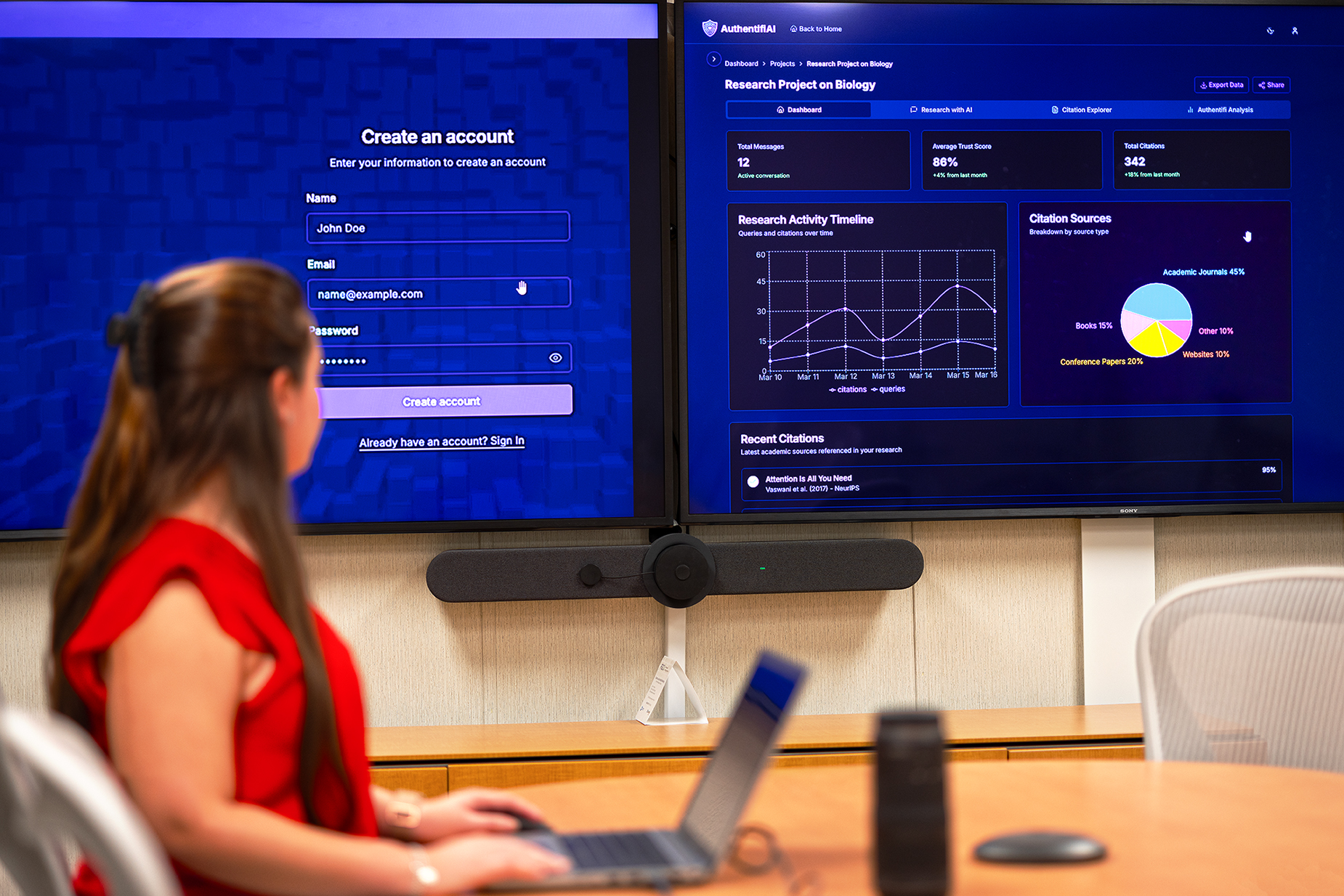

Although Authentifi’s interface is simple, the underlying technology reflects decades of insight.

At the core, Authentifi creates an immutable record of a work’s evolution. Individual keystrokes are not logged; instead, key changes are timestamped, hashed, and preserved. These records form a sequential, real-time chain that cannot be altered retroactively. If the creator edits a paragraph, the system preserves both states. If they delete a section, the record remains. Authentifi captures the rhythm of writing.
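A hash chain is the standard way to make a sequence of records tamper-evident, and a minimal sketch shows why such a record resists retroactive edits. This is not Authentifi’s implementation, which the article does not detail; it assumes SHA-256, an in-memory list, and hypothetical names (`DraftRecord`, `append_snapshot`, `verify_chain`). Each record holds a timestamp, the hash of a draft snapshot, and the hash of the previous record, so changing any earlier record breaks the link stored in the one after it.

```python
import hashlib
import json
import time
from dataclasses import dataclass, asdict

GENESIS = "0" * 64  # placeholder "previous hash" for the first record in a chain


@dataclass(frozen=True)
class DraftRecord:
    index: int
    timestamp: float
    content_hash: str  # SHA-256 of the draft snapshot; the text itself is not stored
    prev_hash: str     # hash of the previous record, which links the chain together

    def record_hash(self) -> str:
        # Hash a canonical JSON encoding of every field in this record.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def append_snapshot(chain: list[DraftRecord], draft_text: str) -> DraftRecord:
    """Append a timestamped record for a new draft state (hypothetical helper)."""
    prev_hash = chain[-1].record_hash() if chain else GENESIS
    record = DraftRecord(
        index=len(chain),
        timestamp=time.time(),
        content_hash=hashlib.sha256(draft_text.encode("utf-8")).hexdigest(),
        prev_hash=prev_hash,
    )
    chain.append(record)
    return record


def verify_chain(chain: list[DraftRecord]) -> bool:
    """Return True only if every record still points at its unaltered predecessor."""
    expected_prev = GENESIS
    for i, record in enumerate(chain):
        if record.index != i or record.prev_hash != expected_prev:
            return False
        expected_prev = record.record_hash()
    return True


if __name__ == "__main__":
    history: list[DraftRecord] = []
    append_snapshot(history, "First rough paragraph.")
    append_snapshot(history, "First rough paragraph, revised.")
    append_snapshot(history, "A restructured second draft.")
    print(verify_chain(history))  # True

    # Rewriting an earlier record breaks the link stored in the record that follows it.
    history[1] = DraftRecord(1, history[1].timestamp, "f" * 64, history[1].prev_hash)
    print(verify_chain(history))  # False
```

In this sketch, altering only the newest record would go unnoticed unless the latest hash is also anchored somewhere the creator cannot rewrite, such as a trusted timestamping service or a public ledger. Chris’s description of a “blockchain meets LLM framework” suggests that kind of anchoring, though the article does not say how it is done.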

To explain this, Chris often uses a metaphor.

“Our system is like a camera pointed at creativity. It does not judge what it sees. It simply records the moment light hits the lens. The records are immutable, they’re timestamped, they’re non-breakable, they’re encrypted. We’re using kind of a blockchain meets LLM framework.”

In this model, metadata is not a threat to privacy. It is a verification tool. Students and creators do not surrender their content to Authentifi. They retain full ownership. Authentifi stores only the minimal data necessary to confirm authenticity. This makes it fundamentally different from systems that collect entire documents for analysis.
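One common way to confirm a document without holding its text is to store only a digest and let the creator present their own copy when needed. The sketch below assumes that approach; it is not a description of Authentifi’s system. The function names are hypothetical, and the creator-held key is an added assumption: a keyed hash (HMAC) keeps someone who sees the stored digest from confirming a guessed draft by hashing it themselves.

```python
import hashlib
import hmac
import secrets


def fingerprint(draft_text: str, creator_key: bytes) -> str:
    """Keyed SHA-256 digest (HMAC) of a draft; a service would keep only this string."""
    return hmac.new(creator_key, draft_text.encode("utf-8"), hashlib.sha256).hexdigest()


def matches_record(draft_text: str, creator_key: bytes, stored_digest: str) -> bool:
    """Recompute the digest from the creator's own copy and compare in constant time."""
    return hmac.compare_digest(fingerprint(draft_text, creator_key), stored_digest)


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # held by the creator, not by the service
    stored = fingerprint("My second draft, with the new introduction.", key)

    print(matches_record("My second draft, with the new introduction.", key, stored))  # True
    print(matches_record("My second draft, with a new introduction.", key, stored))    # False
```

Even a one-word change produces a completely different digest, so the stored string can confirm an exact draft while revealing nothing about its contents.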

Chris considers this essential.

“We are not in the business of harvesting content or intellectual properties. Our job is to protect your work, not collect it. That was a foundational non-negotiable principle from the beginning.”

The platform’s planned architecture supports a wide range of users, from students to journalists to researchers. Its ability to capture the evolution of a document makes it especially valuable for long-form writing, scientific manuscripts, legal drafts, and professional reports where provenance is essential.

The technology builds confidence without creating dependence.

“We want Authentifi to function behind the scenes,” Chris said. “You do not have to write differently. You do not have to learn a new workflow. You create, and we protect.”

This simplicity is intentional. Creativity should not feel monitored. It should feel supported. The design choice also aligns with an emerging digital rights movement centered on self-sovereignty, the idea that individuals should have ownership and agency over their digital work.

SBDC Guidance

As Authentifi began to grow, Chris found himself balancing technical development with the complexities of building a scalable business. He had the vision and the technical foundation, but growth requires coherence, strategic alignment, and perspective from outside the founder’s mind.

The Florida Small Business Development Center at Florida Gulf Coast University became a critical partner in this phase. SBDC consultant Heidi Cramer provided direction that helped shape the company’s identity, messaging, and strategic pathway.

“Heidi helped me put language to what the company was really doing,” Chris said. “She pushed me to define the human problem before the technical one. That changed how we talked about Authentifi. It made the message clear.”

Through the SBDC, Chris developed a sharper articulation of the problem Authentifi solves. The SBDC helped identify key markets, refine the pitch for educational institutions, and create a structure that allowed the technology to scale without losing its purpose.

Chris often reflected on how the SBDC sharpened his trajectory.

“One of the best things the SBDC did was help me slow down long enough to aim properly. They helped me think about the order of operations. Higher education first, testing organizations next, then publishing, then enterprise. With their guidance, it became a business with a purpose, a direction, and a clear reason to exist.”

Heidi helped Chris refine presentations, anticipate objections, and craft responses grounded in educational and ethical precision. Her experience helped Authentifi understand how institutions interpret risk and how to communicate the platform’s benefits in terms familiar to decision makers.

The SBDC also connected Authentifi with faculty members and academic departments conducting research on authorship, assessment, and AI. These relationships provided essential feedback loops, giving Authentifi access to real use cases long before the broader public understood the implications of generative AI.

Chris emphasized the significance of this support.

“They did not just tell us what to do. They helped us recognize what we already had and how to express it. That kind of guidance is rare.”

AI Ethics and the Future of Authorship

The rise of artificial intelligence has amplified philosophical questions that have existed quietly for centuries. What does it mean to create something? When is a piece of writing truly yours? How much of the creative process must belong to a person for the result to be considered human?

These questions matter in every field where knowledge, art, and communication shape opportunity. For Chris, provenance is part of the answer.

“And I think the other magic part of this idea is the provenance type of technology, the authentication piece where we have now these immutable records that downstream and can be referenced for intellectual property. So think of research. Think of patents. Think of coding. Well, if you started with some kind of code already, and AI helps you boost it, as long as you’re tracking that process of how your input affected the output using the machines, you can prove that chain of thought and creation through our mid layer authentication audit trail. Then in the output, you can reference the whole thing and say, ‘compare it.’ So that’s the idea.”

He approaches these issues with a measured calm.

“People are afraid of AI because they think it will erase them. I think Authentifi proves the opposite. When you preserve authorship, you preserve the person behind the work.”

He sees Authentifi not as a reaction to generative AI, but as the infrastructure needed to coexist and co-create with it. Generative models will continue to grow, adapt, and influence every industry. Their existence is not a threat, but their misuse can undermine trust if not supported by verification systems.

“A world without provenance is a world without accountability,” Chris said. “Creativity becomes anonymous, and that is not good for anyone.”

Authentifi does not prevent people from using AI tools. It does not interfere with workflows or limit innovation. It ensures that when a person wants to prove authorship, they can. When they choose to collaborate with AI, they can distinguish their contribution from the machine’s.

This approach positions Authentifi at the intersection of technology and ethics. It represents a future where creators, educators, and institutions can work confidently alongside artificial intelligence without sacrificing integrity.

Expansion into Broader Sectors

As Authentifi AI moves beyond its academic framing, conversations across multiple industries have revealed similar concerns around authorship and verification. Publishers raise questions about how manuscripts can be protected before submission. Corporate leaders point to the growing difficulty of validating internal reports and strategic documents. Legal professionals note the absence of reliable ways to establish document ancestry. Research organizations consider how scientific work might retain clear records of human authorship from first draft to final submission.

Rather than shifting its purpose, Authentifi adapts its framework to remain applicable across these environments while preserving its core function.

“We are not building different products for different industries,” Chris said. “We are building one foundation that works anywhere human creativity matters. If authorship can be verified, trust can exist.”

As these conversations expand, the Florida SBDC plays a critical role in helping Chris decide where to go next without overreaching. Through structured guidance, the SBDC helps distinguish between sectors that are conceptually aligned with Authentifi’s mission, those that require deeper education, and those that will demand more deliberate timing.

“The SBDC keeps the company honest about where it is,” Chris said. “They help me separate interest from readiness. That discipline is the difference between building something sustainable and chasing every open door.”

The Future...

In Naples, where technology startups are less common than hospitality, real estate, or medical ventures, Authentifi AI occupies a particularly distinct space. It stands as evidence that transformative innovation can grow from any region where insight, technical understanding, and thoughtful support intersect.

As Chris looks ahead, he envisions Authentifi AI becoming a standard part of everyday digital life.

“In five years, people will stop asking whether something was written by AI. They will ask whether a person can prove they wrote it. We are building the answer to that question.”

Authentifi AI is more than a security measure. It is a cultural foundation. It protects not only the value of work, but the identity of the person who created it.

Chris described the mission with conviction.

“If we do this right, we make the world safer for creators. We make education fairer. We help people trust their own work again.”

Authentifi AI stands as a reminder that even as technology evolves, human creativity remains at the center of everything. The future belongs not to the systems that generate content, but to the people whose ideas continue to shape the world. Authentifi AI was built for this purpose, and through the guidance of the Florida SBDC at FGCU, it has found the structure and direction to advance that purpose at scale.

“The SBDC helped me turn a technical idea into a coherent business,” Chris said. “They did not add noise. They added clarity.”

As for the technology itself, he puts it plainly: “We are not trying to fight AI. We are trying to protect the people who use it. There is room for both. There has to be.”